EU Formally Adopts World’s First AI Law

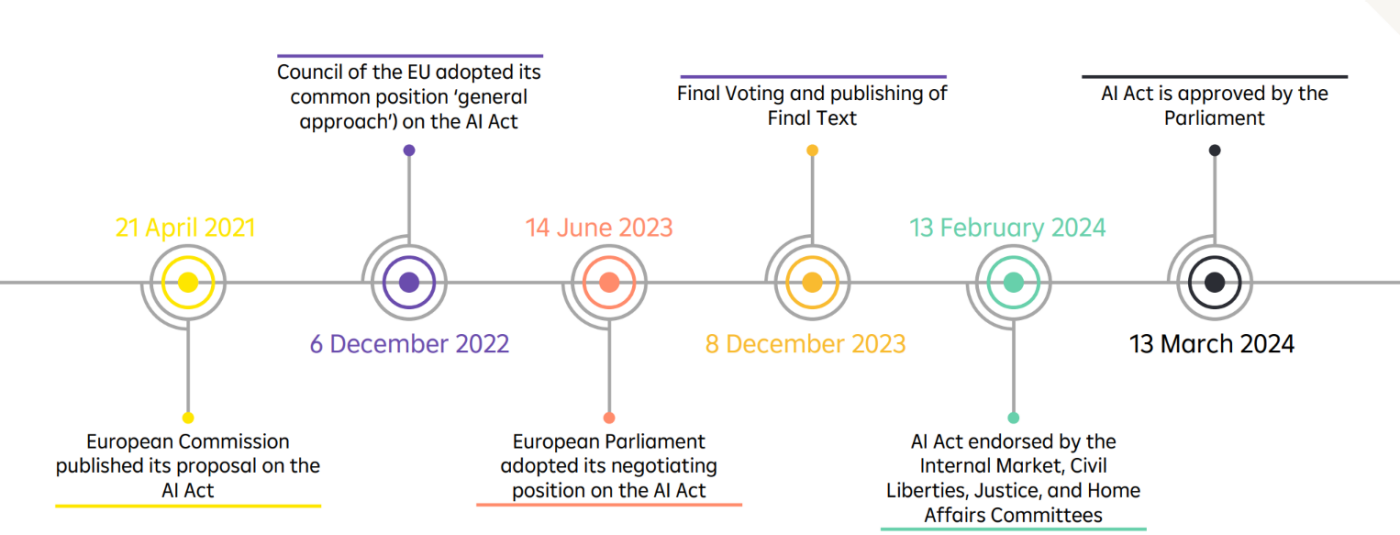

On 13 March 2024, the European Parliament approved the Artificial Intelligence Act, which aims to ensure safety and compliance with fundamental rights while boosting innovation. The regulation, agreed in negotiations with member states in December 2023, was endorsed by MEPs with 523 votes in favour, 46 against and 49 abstentions.

What the AI Act covers…

- emphasizing the ethical application of AI, communicating European values while improving transparency.

- establishing a process and roles to enforce quality at launch and throughout the life cycle.

- fostering collaboration and protecting fundamental rights of EU citizens in the age of AI.

How it intends to achieve that…

- establishing a single standard across the EU to prevent fragmentation, enforced through Conformity Declarations and mandatory CE marking.

- ensuring legal certainty that encourages innovation and investment in AI by creating AI Regulatory Sandboxes.

- empowering national competent authorities to act as supervisory bodies. These authorities will update an EU database of high-risk AI practices and systems.

The AI Act does not apply to:

- areas outside the scope of EU law; the Act should not affect member states' competences in national security;

- AI systems used exclusively for military or defense purposes;

- AI systems used solely for the purpose of research and innovation;

- AI systems used by persons for non-professional reasons.

The Act categorizes AI systems into four groups:

- prohibited,

- high risk,

- limited risk, and

- minimal risk.

You can find out more about the AI Act here: The ‘AI Act’: A Milestone in Harmonizing Artificial Intelligence Regulations (rbinternational.com).

Financial Institutions and AI Regulation

AI systems used for credit scoring and risk assessment in insurance may be classified as high-risk due to their impact on individuals’ financial access and well-being. However, AI systems for fraud detection in financial services are not considered high-risk under the AI Act. Compliance with high-risk system requirements involves adherence to stringent rules on internal governance and risk management processes. Financial supervisory authorities will oversee compliance with the AI Act, integrating market surveillance activities into existing supervisory practices. Non-compliance penalties range from €7.5m to €35m or 1.5% to 7% of annual turnover, depending on the infringement category and company size.

Next steps:

For inquiries please contact:

regulatory-advisory@rbinternational.com

RBI Regulatory Advisory

Raiffeisen Bank International AG | Member of RBI Group | Am Stadtpark 9, 1030 Vienna, Austria | Tel: +43 1 71707 - 5923